New Quantization Approach for Learned Image Compression

As autonomous driving systems require ever-increasing amounts of data, data compression is playing an increasingly important role. The consortium partners of the KI Data Tooling project have now dedicated a new publication to this topic.

Data volumes in autonomous driving keep growing, for example because of higher frame rates and a larger number of sensors. New and efficient compression algorithms are therefore needed so that storage media and bus systems in the vehicle are not overloaded.

A recently published approach, presented on June 19 at the Challenge on Learned Image Compression (CLIC) workshop at CVPR, addresses one specific part of an image compression framework: quantization. Learned image compression uses an autoencoder architecture. The encoder first reduces the spatial resolution of the image, producing a representation called the latent space; during decompression, the decoder maps this representation back to the original resolution to reconstruct the image. The spatial reduction alone already shrinks the amount of data. In a second step, the value range of the latent space is discretized (quantization), which reduces the size further. Because the latent space is then discrete, entropy coding can be applied as a third step to exploit any redundancy still present in the data and shorten the generated bit sequences once more. These bit sequences are finally stored, for example on disk, or sent over a transmission channel.

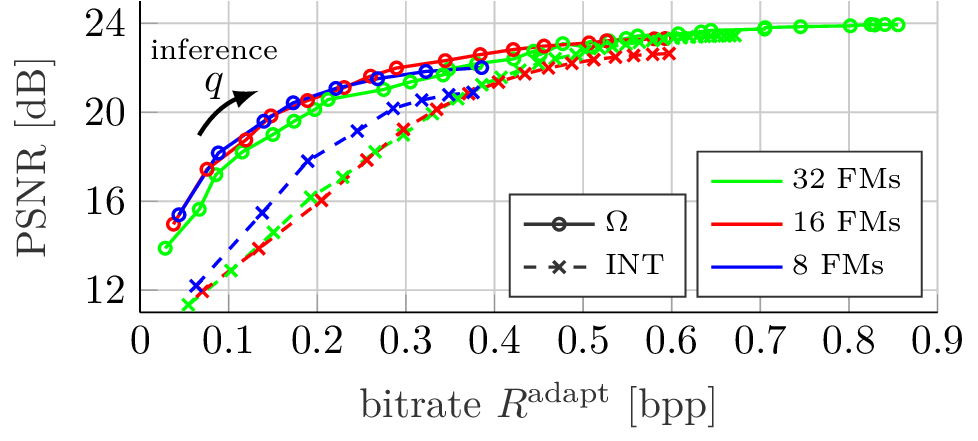
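
The three stages described above (spatial reduction, quantization, entropy coding) can be illustrated with a minimal Python sketch. It is a toy stand-in, not the method from the publication: average pooling replaces the learned encoder, nearest-neighbour upsampling replaces the learned decoder, uniform scalar rounding replaces the proposed quantizer, and the entropy coder is only approximated by the empirical Shannon entropy of the quantized symbols. All function names and the step size of 16 are assumptions chosen for illustration.

```python
import math
from collections import Counter

def encode(image, factor=2):
    """Toy 'encoder': average-pool factor x factor blocks to reduce spatial resolution."""
    h, w = len(image), len(image[0])
    return [
        [
            sum(image[y + dy][x + dx] for dy in range(factor) for dx in range(factor))
            / factor ** 2
            for x in range(0, w, factor)
        ]
        for y in range(0, h, factor)
    ]

def quantize(latent, step=16.0):
    """Uniform scalar quantization (a stand-in): map each value to an integer index."""
    return [[round(v / step) for v in row] for row in latent]

def dequantize(indices, step=16.0):
    """Map integer indices back to representative values."""
    return [[i * step for i in row] for row in indices]

def decode(latent, factor=2):
    """Toy 'decoder': nearest-neighbour upsampling back to the input resolution."""
    out = []
    for row in latent:
        upsampled = [v for v in row for _ in range(factor)]
        out.extend(list(upsampled) for _ in range(factor))
    return out

def ideal_bits(indices):
    """Empirical Shannon entropy times symbol count: roughly what an ideal
    entropy coder would need, ignoring the cost of sending the distribution."""
    symbols = [i for row in indices for i in row]
    n = len(symbols)
    return -sum(c * math.log2(c / n) for c in Counter(symbols).values())

# A 4x4 grayscale toy image (hypothetical values).
image = [
    [10, 12, 200, 202],
    [11, 13, 201, 203],
    [10, 12, 200, 202],
    [11, 13, 201, 203],
]

latent = encode(image)                 # 2x2 latent space
indices = quantize(latent)             # discrete symbols
bits = ideal_bits(indices)             # estimated size after entropy coding
reconstruction = decode(dequantize(indices))
print(indices, bits)
```

Each stage shrinks the representation in a different way: pooling reduces the number of values, quantization reduces the number of distinct values, and the entropy estimate reflects how unevenly those values are distributed.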

An important difference from the well-established baseline method is that the novel approach uses vector quantization, which in principle leads to lower quantization errors than scalar quantization, and that it does not require a codebook. The publication also shows how the quantization approach can be combined with a feature map masking mechanism to adjust the bit rate during inference, after the model has been trained. This is particularly interesting in practice because only a single model has to be trained instead of one model per bit rate. This would also be possible with the baseline method; however, the proposed quantizer adapts better to the use case of adaptive bit rate compression.
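
The idea of adjusting the bit rate by masking feature maps can be sketched in a few lines of Python. This is only an illustration under assumptions that the article does not confirm: the latent space is modelled as a list of feature maps ordered from most to least important, masking always drops the trailing maps, and plain rounding stands in for the quantizer (it is not the codebook-free vector quantizer from the publication). The names `compress` and `restore` are hypothetical.

```python
def quantize(feature_map):
    """Stand-in quantizer: plain rounding (NOT the quantizer from the publication)."""
    return [[round(v) for v in row] for row in feature_map]

def compress(latent, keep):
    """Quantize and 'transmit' only the first `keep` feature maps (keep >= 1)."""
    return [quantize(fm) for fm in latent[:keep]]

def restore(received, total):
    """Decoder side: fill every masked feature map with zeros before decoding."""
    height, width = len(received[0]), len(received[0][0])
    missing = total - len(received)
    return received + [[[0] * width for _ in range(height)] for _ in range(missing)]

# Hypothetical latent space: 4 feature maps of size 2x2.
latent = [
    [[3.2, -1.7], [0.4, 2.9]],
    [[1.1, 0.2], [-0.6, 0.8]],
    [[0.3, -0.2], [0.1, 0.4]],
    [[0.1, 0.0], [-0.1, 0.2]],
]

# One model, several operating points: fewer feature maps means fewer symbols to code.
for keep in (4, 2, 1):
    received = compress(latent, keep)
    symbols = sum(len(row) for fm in received for row in fm)
    full = restore(received, total=len(latent))
    print(f"keep={keep}: {symbols} symbols sent, decoder sees {len(full)} feature maps")
```

The number of transmitted symbols, and with it the bit rate, is chosen at inference time through `keep`, while the decoder always receives a latent space of the full shape.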

As part of the complete data solution being developed in KI Data Tooling, research and development in learned image compression play a major role in the project. The new publication thus provides an important building block for achieving the project goals.

Image: KI Data Tooling